Chatbots

Remove chatbots that eroticise children: Brazil tells Meta as tech giant lets AI sensualise kids

Context: Brazil’s recent demand that Meta remove chatbots that eroticise children highlights the urgent need to regulate AI chatbots. Reports suggest that some AI personas on platforms such as Instagram and WhatsApp were able to engage in inappropriate or suggestive conversations with minors, raising alarms about AI safety and ethics.

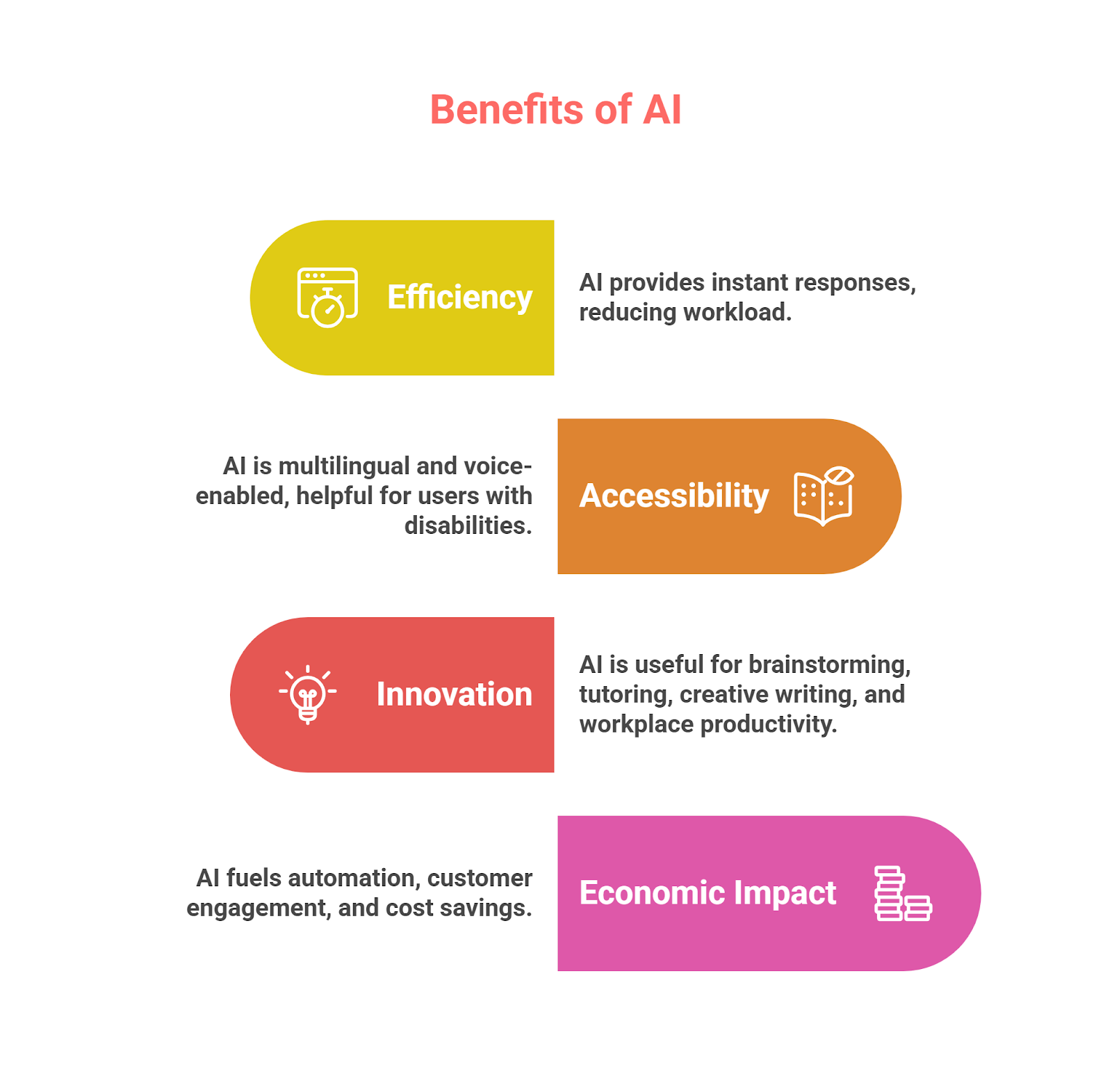

What are Chatbots?

Chatbots are software programs designed to simulate human conversation through text or voice. They range from simple rule-based systems to advanced AI-driven models that use natural language processing (NLP) and machine learning to understand and respond to users. There are two main types:

- Task-oriented chatbots handle specific queries (e.g., customer support).

- Conversational AI chatbots enable more natural, open-ended dialogue (e.g., ChatGPT, Meta AI, Siri).

They’re used across websites, apps, smart devices, and social platforms to assist, entertain, or inform users.
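To make the "rule-based" idea concrete, here is a minimal sketch of a task-oriented chatbot in Python. It is an illustration only: the keyword rules, the canned replies, and the names `RULES`, `FALLBACK`, and `reply` are hypothetical and do not describe how any real product works.

```python
import re

# Hypothetical keyword rules for a customer-support bot.
# Each rule pairs a pattern with a fixed, pre-written reply.
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.I), "Hello! How can I help you today?"),
    (re.compile(r"\b(refund|return)\b", re.I), "You can request a refund from the Orders page."),
    (re.compile(r"\b(hours|open|timings)\b", re.I), "We are open 9 am to 6 pm, Monday to Friday."),
]
FALLBACK = "Sorry, I did not understand that. Could you rephrase?"


def reply(message: str) -> str:
    """Return the reply of the first rule whose pattern matches the message."""
    for pattern, response in RULES:
        if pattern.search(message):
            return response
    # No rule matched: a rule-based bot can only fall back to a stock answer.
    return FALLBACK


if __name__ == "__main__":
    for text in ["Hello there", "How do I get a refund?", "Tell me a story"]:
        print(f"User: {text}")
        print(f"Bot:  {reply(text)}")
```

A bot like this can only say what its authors wrote in advance, so its behaviour is easy to audit. Conversational AI chatbots generate replies with a trained model instead of fixed rules, which makes their output far less predictable.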

How can these bots affect children?

- Exposure to Inappropriate Content: Some bots, especially those with weak safeguards, may generate sexualised, violent, or misleading responses.

- False Trust: Children may form parasocial relationships with bots, believing they are real friends or confidants.

- Mental Health Impact: Over-reliance on bots for emotional support can lead to isolation, confusion, or even harmful behaviour.

- Privacy Concerns: Kids may unknowingly share personal data, which could be stored or misused.

- Manipulation & Persuasion: AI bots can tailor responses based on user profiles, making them highly persuasive, even in dangerous ways.

The Source’s Authority and Ownership of the Article is Claimed By THE STUDY IAS BY MANIKANT SINGH